What Is Observability in Modern IT Systems? Practical Guide for IT Leaders and Developers

Observability is the practice of instrumenting software systems so that their internal state can be inferred from external telemetry; it combines metrics, logs and traces to reveal how distributed systems behave under load or failure. By collecting and correlating high-fidelity telemetry, teams can detect anomalies, diagnose root causes, and improve reliability before user impact grows, making observability essential for cloud-native and microservice architectures. This guide explains the pillars of observability, contrasts observability with traditional monitoring, and maps the practical benefits that matter to engineering and business stakeholders. It also covers how AI augments visibility and which tools and standards enable effective telemetry pipelines, and it lays out a step-by-step roadmap for implementing observability as code with CI/CD and SRE practices. Along the way you will find concrete examples, comparison tables, and actionable lists to help technical teams adopt full-stack observability for production systems.

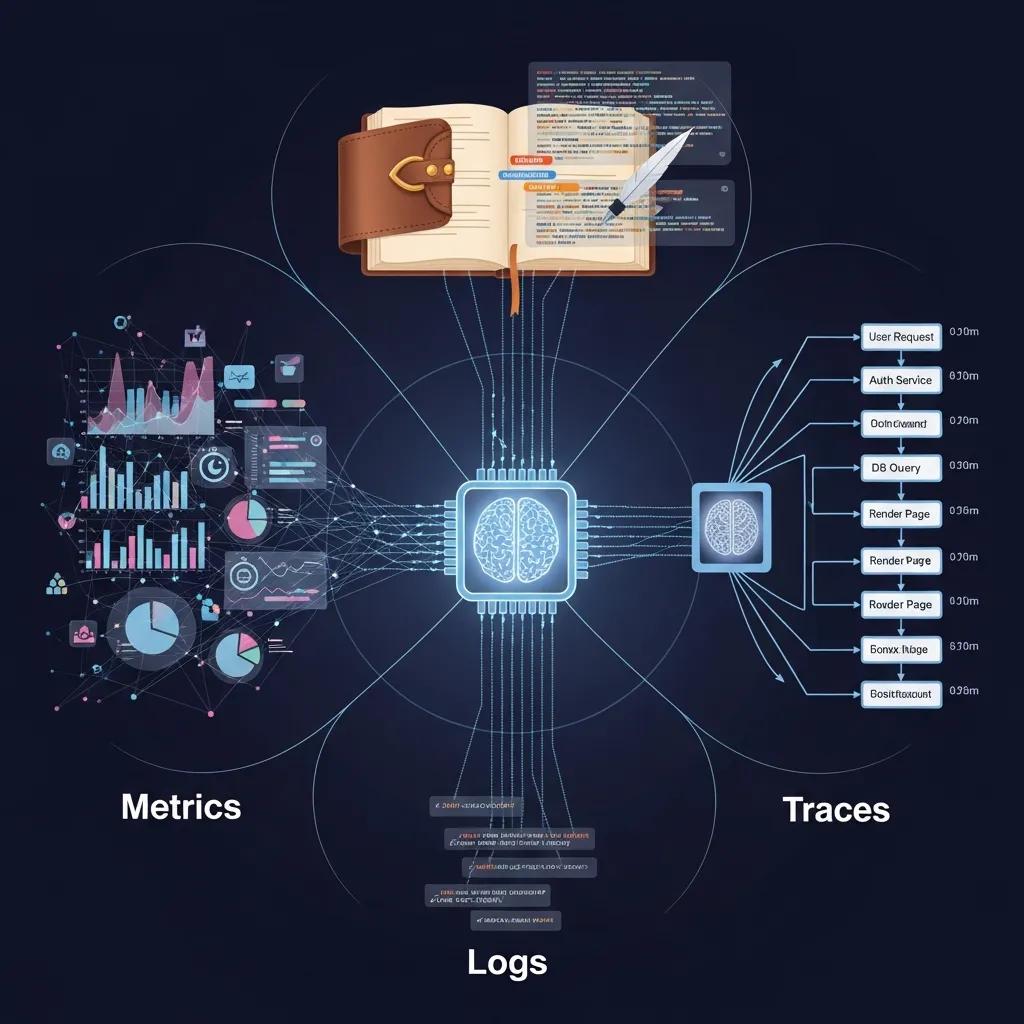

What Are the Pillars of Observability: Metrics, Logs, and Traces?

Observability rests on three complementary pillars—metrics, logs and traces—each providing different signal types that combine to reveal system behaviour, enabling faster diagnosis and more precise remediation. Metrics offer quantified, time-series views of system health; logs provide event-level context and detailed payloads; traces show end-to-end request flows across services so you can follow causality through distributed systems. Together these pillars reduce mean time to resolution and support SRE practices by turning raw telemetry into actionable intelligence. The following table summarises each pillar, how data is collected, and example KPIs to make selection and instrumentation decisions easier.

Each telemetry pillar has its own collection method and characteristic KPIs.

| Telemetry Pillar | Collection Method | Example KPIs |
|---|---|---|
| Metrics | Time-series sampling via exporters/agents (counters, gauges, histograms) | CPU usage, request latency P95, error rate |
| Logs | Structured log emissions, aggregation through collectors | Error counts, exception traces, user session events |
| Traces | Distributed tracing with spans and context propagation | End-to-end latency, span duration, service call graph |

This comparison highlights that metrics are ideal for trend detection, logs for contextual investigation, and traces for causal analysis; combining them enables high-fidelity observability that supports both SRE and product-level KPIs.

Further research emphasizes the critical role of effectively correlating these diverse data sources for robust observability in complex microservices architectures.

Metrics, Logs, Traces: Unified Observability for Microservices

…focusing on metrics, logs, and traces as complementary data sources. The challenge lies in correlating these signals effectively; highlight anti-patterns (like ignoring distributed traces or over-collecting logs) and best practices for building robust observability into microservices.

Metrics, Logs, and Traces: A Unified Approach to Observability in Microservices, P Bhosale, 2022

Metrics: What they measure, types, and how to use them

Metrics quantify system behaviour as numerical time-series and are typically collected as counters, gauges, or histograms; these types support aggregation and statistical analysis for performance and capacity planning. Counters accumulate events (e.g., requests served), gauges measure instantaneous values (e.g., memory usage), and histograms capture latency distributions that underpin P95/P99 calculations; correct choice affects cardinality and storage costs. Instrumentation best practice includes adding meaningful labels, limiting high-cardinality dimensions, and choosing appropriate scrape intervals to balance resolution and cost. Effective metrics usage feeds SLOs and alerting rules, and when paired with traces and logs it accelerates root-cause analysis by pointing investigators to the right service and time window.
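To make the three metric types concrete, here is a minimal, dependency-free Python sketch. It is not the Prometheus or OpenTelemetry client API; the class names, bucket bounds and synthetic latencies are illustrative assumptions. The quantile estimate follows the same idea as Prometheus's `histogram_quantile` (linear interpolation inside the bucket that contains the target rank), which is why bucket boundaries determine how accurate P95/P99 figures can be.

```python
import bisect
import math
from dataclasses import dataclass, field


@dataclass
class Counter:
    """Monotonically increasing total, e.g. requests served."""
    value: float = 0.0

    def inc(self, amount: float = 1.0) -> None:
        if amount < 0:
            raise ValueError("a counter can only increase")
        self.value += amount


@dataclass
class Gauge:
    """Point-in-time value that can rise or fall, e.g. memory in use."""
    value: float = 0.0

    def set(self, value: float) -> None:
        self.value = value


@dataclass
class Histogram:
    """Fixed buckets: bucket i counts observations <= bounds[i], plus an overflow bucket."""
    bounds: list
    counts: list = field(init=False)
    total: int = 0

    def __post_init__(self) -> None:
        self.bounds = sorted(self.bounds)
        self.counts = [0] * (len(self.bounds) + 1)  # last slot is the +Inf bucket

    def observe(self, value: float) -> None:
        self.counts[bisect.bisect_left(self.bounds, value)] += 1
        self.total += 1

    def quantile(self, q: float) -> float:
        """Estimate the q-quantile by linear interpolation inside the bucket
        that contains rank q * total (accuracy depends on the bucket layout)."""
        if self.total == 0:
            return math.nan
        rank = q * self.total
        seen, lower = 0, 0.0
        for count, upper in zip(self.counts, self.bounds):
            if count and seen + count >= rank:
                return lower + (upper - lower) * (rank - seen) / count
            seen, lower = seen + count, upper
        return self.bounds[-1]  # rank is in the overflow bucket: cap at the last finite bound


requests = Counter()
memory_bytes = Gauge()
latency_s = Histogram(bounds=[0.05, 0.1, 0.25, 0.5, 1.0])

for latency in [0.04] * 90 + [0.2] * 8 + [0.8] * 2:  # 100 synthetic requests
    requests.inc()
    latency_s.observe(latency)
memory_bytes.set(512 * 1024 * 1024)

print(requests.value, latency_s.quantile(0.95), latency_s.quantile(0.99))
```

With 90 fast, 8 medium and 2 slow requests, the P95 estimate lands inside the 0.1 to 0.25 s bucket even though no request actually took about 0.19 s, a reminder that histogram percentiles are estimates shaped by the chosen buckets.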

Logs and Traces: Event data and distributed tracing for end-to-end visibility

Structured logs encode event data as key-value fields that make aggregation and searching efficient, while unstructured logs remain useful for free-form diagnostics; consistent timestamping and inclusion of correlation IDs are essential for joining logs to traces. Distributed tracing captures spans that represent units of work, propagates trace context across service boundaries, and reconstructs the causal path of a request so teams can see where latency or errors accumulate. Correlating logs and traces by including trace IDs in log fields gives investigators immediate access to both the high-level request flow and the low-level event details, dramatically reducing the time spent hunting for root causes during incidents. OpenTelemetry standardises instrumentation and context propagation, while tracing back-ends such as Jaeger and commercial APMs store and visualise the resulting traces, so telemetry from microservices, serverless functions and edge components can be correlated reliably.
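The log-to-trace correlation described above can be sketched with the Python standard library alone. The header format follows the W3C Trace Context `traceparent` layout (`version-traceid-parentid-flags`); everything else, including the `continue_trace` helper, the `checkout` logger name and the `trace_id`/`span_id` JSON field names, is a hypothetical illustration rather than the OpenTelemetry SDK, which handles propagation and log correlation for you.

```python
import io
import json
import logging
import re
import secrets

# W3C Trace Context "traceparent": version-traceid-parentid-flags (lowercase hex, version 00 only here)
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent() -> str:
    """Start a trace at the edge: random 16-byte trace-id, 8-byte span-id, sampled flag."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def continue_trace(incoming: str) -> tuple:
    """Keep the caller's trace-id and mint a new span-id for local work.

    Returns (trace_id, span_id, outgoing_traceparent) for the next hop."""
    match = TRACEPARENT_RE.match(incoming)
    if not match or set(match.group(1)) == {"0"} or set(match.group(2)) == {"0"}:
        raise ValueError(f"invalid traceparent: {incoming!r}")  # all-zero IDs are invalid
    trace_id, _caller_span_id, flags = match.groups()
    span_id = secrets.token_hex(8)
    return trace_id, span_id, f"00-{trace_id}-{span_id}-{flags}"


class JsonFormatter(logging.Formatter):
    """One JSON object per line; trace_id/span_id let a back-end join logs to traces."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "ts": self.formatTime(record),
            "level": record.levelname,
            "msg": record.getMessage(),
            "trace_id": getattr(record, "trace_id", None),
            "span_id": getattr(record, "span_id", None),
        })


if __name__ == "__main__":
    stream = io.StringIO()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    log = logging.getLogger("checkout")
    log.addHandler(handler)
    log.setLevel(logging.INFO)

    inbound = new_traceparent()  # stands in for the header received from an upstream caller
    trace_id, span_id, outbound = continue_trace(inbound)
    log.info("payment call failed", extra={"trace_id": trace_id, "span_id": span_id})
    print(stream.getvalue().strip())  # structured log line carrying the trace-id
    print("traceparent for downstream:", outbound)
```

Because every hop keeps the same trace-id and every log line carries it, a single ID is enough to pull both the trace and the matching log lines during an incident.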

Observability vs Monitoring: Key Differences and Why It Matters

Observability and monitoring share goals but differ in scope: monitoring detects known failure modes via predefined metrics and alerts, while observability enables teams to infer unknown problems by exposing internal state through rich telemetry. Monitoring answers “Is the system within expected thresholds?” using configured rules and dashboards; observability answers “Why is the system behaving this way?” by supplying data that supports hypothesis-driven investigation. This distinction matters because modern distributed systems inevitably produce novel failure modes that static alerts cannot anticipate, and observability reduces the time and uncertainty involved in diagnosing such emergent issues. The short comparison below clarifies when to rely on monitoring, when to invest in observability, and how both fit into a resilient operations practice.

When choosing between monitoring and observability, consider intended outcomes and investigative requirements.

- Monitoring: Alerts and dashboards for known conditions; proactive for known SLIs and thresholds.

- Observability: High-cardinality telemetry and correlation to infer unknowns; essential for complex, distributed systems.

- Combined approach: Use monitoring for immediate detection and observability for deep diagnosis and prevention.

Using both makes incident response more reliable: monitoring triggers the alert and observability provides the evidence to resolve it quickly, which shortens MTTR and improves service reliability.

Definitions, differences, and the 'unknown unknowns' concept

Monitoring is the practice of measuring system health against expected values using predefined metrics and thresholds, which is effective for “known unknowns” where you already know what to watch. Observability focuses on collecting rich telemetry so engineers can probe the system and infer states they did not anticipate—addressing “unknown unknowns” that emerge from complex interactions in microservices, container orchestration, or external dependencies. A practical scenario illustrates this: an alert for increased latency (monitoring) is escalated, but observability provides traces showing a misconfigured downstream service or a bad database query as the true root cause. Appreciating this difference shifts investment from building more alerts to improving instrumentation, correlation and exploratory tooling.
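The latency scenario can be sketched in a few lines of Python. The span tuples, service names and the 300 ms threshold are invented for illustration, and the exclusive-time calculation assumes child spans run sequentially rather than in parallel. The point is the contrast: the monitoring rule can only say that latency is high, while the same span data, queried with a question nobody wrote an alert for, points at the database call.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical spans: (trace_id, span_id, parent_id, service, operation, duration_ms)
SPANS = [
    ("t1", "a", None, "api", "GET /orders", 820), ("t1", "b", "a", "orders", "list", 790),
    ("t1", "c", "b", "db", "SELECT orders", 760),
    ("t2", "a", None, "api", "GET /orders", 95), ("t2", "b", "a", "orders", "list", 80),
    ("t2", "c", "b", "db", "SELECT orders", 40),
    ("t3", "a", None, "api", "GET /orders", 900), ("t3", "b", "a", "orders", "list", 860),
    ("t3", "c", "b", "db", "SELECT orders", 830),
    ("t4", "a", None, "api", "GET /orders", 110), ("t4", "b", "a", "orders", "list", 90),
    ("t4", "c", "b", "db", "SELECT orders", 50),
]


def latency_alert(spans, threshold_ms=300.0):
    """Monitoring: a predefined rule on a known signal (mean root-span latency)."""
    return mean([s[5] for s in spans if s[2] is None]) > threshold_ms


def exclusive_times(spans):
    """Each span's own time: its duration minus its direct children's durations
    (assumes children run one after another, not in parallel)."""
    children_ms = defaultdict(float)
    for trace_id, _span_id, parent_id, _svc, _op, duration in spans:
        if parent_id is not None:
            children_ms[(trace_id, parent_id)] += duration
    return [(t, svc, op, dur - children_ms[(t, sid)]) for t, sid, _p, svc, op, dur in spans]


def suspects(spans, slow_ms=300.0):
    """Observability: ask a question no alert anticipated. Which operation's own
    time grew most in slow traces compared with normal ones?"""
    slow_traces = {s[0] for s in spans if s[2] is None and s[5] > slow_ms}
    slow, normal = defaultdict(list), defaultdict(list)
    for trace_id, svc, op, own_ms in exclusive_times(spans):
        (slow if trace_id in slow_traces else normal)[(svc, op)].append(own_ms)
    growth = {k: mean(v) - (mean(normal[k]) if normal[k] else 0.0) for k, v in slow.items()}
    return sorted(growth.items(), key=lambda kv: kv[1], reverse=True)


print(latency_alert(SPANS))  # the alert fires, but says nothing about why
print(suspects(SPANS)[0])    # the database query accounts for most of the extra time
```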

Impact on incident response and system reliability

When observability is mature, incident response workflows change from blind firefighting to targeted investigation: on-call engineers use traces to localise the problem, logs to read context, and metrics to quantify impact and validate fixes. Observable systems reduce blast radius by making it easier to identify compensating actions, rollbacks or configuration fixes without broad, disruptive interventions. Measurable outcomes include shorter MTTR, fewer cascading failures, and improved SLO compliance, which translate into better customer experience and lower operational costs. Embedding these practices into post-incident reviews and runbooks ensures continuous improvement and a feedback loop between production signals and engineering work.

What Are the Benefits of Observability in Modern IT Systems?

Observability delivers technical and business benefits by converting telemetry into reproducible insights that inform engineering decisions and product outcomes; it enables faster diagnosis, performance optimisation, and data-driven capacity planning. The benefits map directly to KPIs such as MTTR, availability, latency percentiles and operational cost per request, making observability an investment that can be measured against business impact. The table below links core benefits to measurable metrics and business outcomes to help stakeholders prioritise observability workstreams and funding.

Below is a practical mapping from observability benefits to metrics and business impact to aid stakeholder discussions.

| Benefit | Metric / Measure | Business Impact |
|---|---|---|
| Faster RCA & reduced MTTR | MTTR minutes/hours | Lower outage cost; faster customer recovery |
| Improved performance | Latency P95/P99, throughput | Better UX and conversion; reduced churn |
| Proactive fault detection | Anomalies detected pre-impact | Reduced incidents and SLA breaches |
| Smarter capacity planning | Resource utilisation, forecasting error | Cost optimisation and predictable scaling |

This mapping makes the case for observability investments by connecting technical efforts to financially measurable outcomes and prioritised KPIs.

Faster root-cause analysis and reduced MTTR

Combining metrics, logs and traces enables engineers to move from alert to resolution with less guesswork: metrics narrow the time and subsystem, traces reveal the request path, and logs provide the payload-level evidence needed for a fix. Practical improvements include automated drilldowns from dashboards into traces, correlation IDs that link user sessions across services, and playbooks that map common symptom patterns to remediation steps. Organisations that adopt these practices commonly report material MTTR reductions by eliminating repetitive investigative steps in on-call rotations. Investing in tooling and runbooks that make telemetry actionable is therefore central to reducing the business impact of incidents.
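Claims about MTTR reductions are only comparable if MTTR is computed the same way before and after a change. A minimal sketch, assuming MTTR here means the mean time from detection to resolution (teams also measure from incident start, or to mitigation rather than full resolution); the `incident` helper and all incident data are invented for illustration.

```python
from datetime import datetime, timedelta


def mttr(incidents):
    """Mean time to resolve: average of (resolved - detected) across incidents."""
    if not incidents:
        raise ValueError("no incidents in the period")
    durations = [resolved - detected for detected, resolved in incidents]
    return sum(durations, timedelta()) / len(durations)


def incident(start, minutes):
    """Hypothetical helper: an incident detected at `start`, resolved `minutes` later."""
    return start, start + timedelta(minutes=minutes)


t0 = datetime(2024, 1, 1, 9, 0)
before = [incident(t0, 90), incident(t0, 150), incident(t0, 60)]  # invented data
after = [incident(t0, 30), incident(t0, 45), incident(t0, 60)]

print(mttr(before), mttr(after))  # 1:40:00 0:45:00
```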

Improved reliability, performance, and user experience

Observability supports performance optimisation cycles: detect anomalies, diagnose root causes, implement fixes, and measure the outcome against SLIs and SLOs to close the loop. By focusing on user-centric metrics such as page load time or API latency, teams can prioritise fixes that deliver measurable user experience improvements and higher conversion rates. Continuous observability also enables capacity planning that aligns infrastructure costs with demand patterns, reducing over-provisioning while maintaining reliability. These feedback-driven improvements create sustainable product quality gains and stronger alignment between engineering work and business objectives.

How Does AI in Observability Enhance Visibility?

AI augments observability by automating anomaly detection, surfacing probable root causes, and enabling natural language querying across telemetry so teams can find answers faster and scale expertise. Machine learning models can detect subtle deviations in high-dimensional telemetry that static thresholds miss, forecast capacity needs, and prioritise alerts by likely impact. Natural language and generative AI can summarise incident timelines, suggest remediation steps and produce human-readable explanations of complex causal graphs, improving time-to-insight for on-call engineers and product teams. For teams producing technical content or vendor documentation, AI-driven semantic research and content automation tools can accelerate the creation of clear observability documentation and developer guides that increase adoption.

Search Atlas positions itself as an AI-powered SEO automation platform that supports technical content and developer outreach by turning semantic research and first-party data into optimised documentation and marketing content. Products such as OTTO SEO provide AI-powered website optimisation, Content Genius enhances content quality through semantic enrichment, and GSC Insights integrates first-party search data to inform topical strategy. These capabilities help observability vendors and engineering teams produce clearer, discoverable content about tooling, standards and integration patterns to accelerate adoption and developer onboarding.

Anomaly detection and predictive analytics

AI-based anomaly detection uses supervised and unsupervised approaches to find deviations across metrics, logs and traces, flagging events that traditional threshold-based monitors miss and reducing alert fatigue by prioritising signals. Forecasting models project capacity needs and detect early indicators of degradation, enabling preemptive scaling or mitigation actions before customer impact occurs. Integration with alerting systems and runbooks means detected anomalies can trigger automated diagnostics—capturing relevant traces and logs for rapid handoff to engineers. Combining predictive analytics with SRE practices turns observability into a proactive system that reduces incident frequency and operational risk.
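As a deliberately simple stand-in for the ML models described above (it is a statistical baseline, not a supervised or learned model), a rolling z-score detector shows the core idea: derive a baseline from recent data and flag points that deviate from it, instead of relying on a fixed threshold. The window size, threshold and synthetic series are assumptions for illustration.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscore_anomalies(values, window=20, z_threshold=3.0):
    """Return indices of points more than `z_threshold` standard deviations away
    from the mean of the preceding `window` points."""
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(values):
        if len(history) == window:  # wait until the baseline window is full
            mu, sigma = mean(history), pstdev(history)
            # sigma == 0 (a perfectly flat baseline) is skipped to avoid dividing by zero
            if sigma > 0 and abs(value - mu) / sigma > z_threshold:
                anomalies.append(i)
        history.append(value)  # note: flagged points also enter the baseline here
    return anomalies


# Synthetic latency series (ms): a gently oscillating baseline with one spike.
latencies = [100 + (i % 5) for i in range(60)]
latencies[45] = 400

print(rolling_zscore_anomalies(latencies))  # [45]
```

Production detectors usually also model seasonality and exclude flagged points from the baseline; this sketch does neither.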

This proactive shift, driven by AI, transforms observability from a reactive discipline into an automated and predictive capability.

AI-Powered Observability: Proactive Anomaly Detection

…observability practices, such as log and metric collection, dashboard visualization, and performance traceability, enhances the ability to detect and diagnose issues. The integration of AI in anomaly detection, root cause analysis, and predictive insights transforms observability from a reactive to a proactive and automated discipline.

AI-Powered Observability: A Journey from Reactive to Proactive, Predictive, and Automated, R Manchana, 2024

AI-powered insights and natural language querying

Natural language interfaces let engineers query telemetry using plain language, for example asking for “top services by P99 latency in the last 30 minutes,” and receive concise answers or suggested diagnostics, which lowers the barrier for non-experts to explore system behaviour. Generative models can summarise incident timelines, propose likely root causes by correlating anomalies across telemetry, and draft initial remediation steps—while emphasising human-in-the-loop verification to avoid over-reliance on automated suggestions. Governance considerations include validating model outputs, logging AI recommendations for auditability, and maintaining traceability between AI-generated insights and source telemetry to preserve trust. These capabilities accelerate investigation workflows and democratise access to operational knowledge.
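To illustrate what sits behind such an interface, the sketch below hard-codes the structured query a language model might produce for the example question and then executes it deterministically over span-like records. The query schema, record layout and the `answer` helper are hypothetical. The audit log reflects the governance point above: it keeps the question, the generated query and the result together so a human can check the answer against the source telemetry.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of values at or below it."""
    ordered = sorted(values)
    rank = -(-pct * len(ordered) // 100)  # integer ceil(pct * n / 100)
    return ordered[max(rank, 1) - 1]


def run_query(query, records, now):
    """Deterministically execute a structured query over span-like records."""
    since = now - timedelta(minutes=query["window_minutes"])
    groups = defaultdict(list)
    for rec in records:
        if since <= rec["ts"] <= now:
            groups[rec[query["group_by"]]].append(rec["latency_ms"])
    scored = [(key, percentile(vals, query["percentile"])) for key, vals in groups.items()]
    return sorted(scored, key=lambda kv: kv[1], reverse=True)[: query["limit"]]


def answer(question, records, now, audit_log):
    """Hypothetical NL entry point: the query dict stands in for model output."""
    query = {"percentile": 99, "group_by": "service", "window_minutes": 30, "limit": 2}
    result = run_query(query, records, now)
    # Record question, generated query and result so the answer can be audited later.
    audit_log.append({"question": question, "query": query, "result": result})
    return result


now = datetime(2024, 1, 1, 12, 0)
records = [
    {"ts": now - timedelta(minutes=m), "service": svc, "latency_ms": ms}
    for m, svc, ms in [
        (5, "checkout", 120), (10, "checkout", 130), (12, "checkout", 900),
        (3, "search", 80), (8, "search", 95), (20, "search", 110),
        (4, "auth", 40), (25, "auth", 60),
        (45, "auth", 5000),  # older than 30 minutes, so excluded
    ]
]
audit = []
print(answer("top services by P99 latency in the last 30 minutes", records, now, audit))
# [('checkout', 900), ('search', 110)]
```

Keeping the model's job limited to producing a reviewable query, and leaving the arithmetic to deterministic code, is one way to make AI-generated answers traceable to source telemetry.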

Which Tools, Platforms and Standards Enable Observability?

The observability ecosystem includes open-source projects, commercial APMs and standards that simplify instrumentation and data exchange; selecting the right mix depends on scale, required enterprise features and tolerance for vendor lock-in. Open standards like OpenTelemetry unify telemetry formats and context propagation, enabling collectors and exporters to feed diverse back-ends, while tools such as Prometheus and Grafana provide robust OSS monitoring and visualisation. Commercial platforms and APMs offer integrated analytics, long-term retention and enterprise support for organisations that need turnkey solutions. The table below helps compare representative tools by category and primary use-case to inform architecture choices.

This table helps teams quickly scan tool categories, provenance and core strengths to guide initial stack decisions.

| Tool | Category | Primary Use-case / Strength |
|---|---|---|
| Prometheus | Open-source | Time-series metrics, alerting for cloud-native systems |
| Grafana | Open-source | Visualisation and unified dashboards across data sources |
| Jaeger | Open-source | Distributed tracing and trace storage for microservices |
| Splunk | Commercial | Log analytics, enterprise search and compliance |
| New Relic | Commercial | Full-stack APM with integrated analytics and SLO tooling |

Selecting a hybrid stack often balances the flexibility and cost-efficiency of open-source with the operational convenience and enterprise features of commercial offerings.

Open-source vs commercial tools

Open-source tools deliver flexibility, community-driven integrations and lower licensing costs, making them attractive for engineering-led organisations that can operate and extend the stack. Commercial offerings provide unified experiences, curated analytics, SLAs and support that reduce operational overhead for large enterprises or teams that prioritise time-to-value. A hybrid approach—instrumenting with OpenTelemetry, collecting metrics with Prometheus, visualising in Grafana, and forwarding to a commercial analytics tier—lets teams standardise telemetry while retaining optional enterprise capabilities. Considerations include data retention needs, scale, compliance requirements, and the organisation’s capacity to maintain self-hosted infrastructure.

Open standards, data models, and integration

OpenTelemetry is the de-facto standard for instrumentation and context propagation, providing SDKs, semantic conventions and exporter patterns that allow telemetry to flow from applications to collectors and storage back-ends. A checklist for integration includes: instrumenting code with OpenTelemetry SDKs, deploying collectors to centralise telemetry, configuring exporters for chosen back-ends, and validating context propagation across services to ensure trace continuity. Adoption of open standards simplifies switching back-ends and combining OSS plus commercial tools, which protects teams from vendor lock-in and enables richer cross-tool correlation. Good data models and consistent semantic conventions are the foundation that makes metrics, logs and traces interoperable.

When observability vendors and product teams publish documentation, they also need discoverable, semantically-rich content to reach developers; tools like OTTO SEO, Content Genius and GSC Insights can help create optimised docs and integration guides so that instrumentation patterns and examples are easier to find and adopt by engineering audiences.

How Do You Implement Observability: Best Practices and Roadmap?

Implementing observability requires a roadmap that treats instrumentation as code, integrates telemetry checks into CI/CD, and establishes SRE governance with SLIs, SLOs and error budgets. Start with a minimal instrumentation plan that covers critical user journeys and core services, then iterate by expanding telemetry coverage, validating data quality, and aligning alerts with SLOs. Observability as Code practices ensure instrumentation changes are versioned, reviewed and tested alongside application code, reducing regression risks. The roadmap below outlines phased steps to make observability a repeatable, auditable capability for development and operations teams.

An actionable roadmap helps teams balance quick wins with sustainable, testable instrumentation practices.

- Define SLIs/SLOs: Identify user-centric SLIs, set SLOs and error budgets.

- Instrument critical paths: Add metrics, structured logs and traces for core services.

- Deploy collectors & storage: Configure OpenTelemetry collectors and back-end retention.

- Integrate into CI/CD: Add telemetry tests and instrumentation reviews to pipelines.

- Establish SRE governance: Create runbooks, on-call rotations, and post-incident reviews.

Following this phased approach ensures observability investments deliver measurable reliability improvements and operational resilience.
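The first roadmap step is mostly arithmetic. A minimal sketch, assuming a request-based availability SLI (good requests divided by total requests) and a 30-day window; the 99.9% target and request counts are illustrative, not recommendations.

```python
def request_error_budget(slo, total_requests, failed_requests):
    """Request-based SLI and error budget for one SLO window.

    Returns (sli, allowed_failures, budget_remaining_fraction)."""
    if not 0 < slo < 1 or total_requests <= 0:
        raise ValueError("slo must be a fraction such as 0.999 and traffic must be positive")
    sli = (total_requests - failed_requests) / total_requests
    allowed_failures = (1 - slo) * total_requests
    remaining = 1 - failed_requests / allowed_failures  # negative once the budget is overspent
    return sli, allowed_failures, remaining


def downtime_budget_minutes(slo, days=30):
    """Time-based view of the same target: minutes of full outage the SLO tolerates."""
    return (1 - slo) * days * 24 * 60


# Illustrative numbers: 99.9% availability SLO over a 30-day window.
sli, allowed, remaining = request_error_budget(0.999, 1_000_000, 250)
print(f"SLI={sli:.5f} allowed_failures={allowed:.0f} budget_left={remaining:.0%}")
# SLI=0.99975 allowed_failures=1000 budget_left=75%
print(f"downtime budget: {downtime_budget_minutes(0.999):.1f} min")
# downtime budget: 43.2 min
```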

Instrumentation and Observability as Code

Treat instrumentation like any other code artifact: store SDK configuration and instrumentation snippets in version control, run unit and integration tests that validate metric emission and trace continuity, and require code review for changes that affect telemetry. Observability as Code practices include automated tests that simulate request flows to ensure spans are generated with correct attributes, schemas to validate log formats, and CI gates that prevent deployments when telemetry regressions are detected. This discipline reduces accidental loss of visibility due to refactors, ensures consistent semantic conventions, and enables teams to evolve instrumentation in a controlled, auditable manner. Ensuring telemetry quality through tests shortens feedback loops and improves trust in the data used for incident response.
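A telemetry regression test can be as small as the sketch below. `Span`, `simulate_checkout` and the required-attribute set are hypothetical (the attribute names are modelled on OpenTelemetry semantic conventions, simplified onto the span itself); in a real suite the spans would come from the instrumentation under test, for example via the OpenTelemetry SDK's in-memory span exporter, rather than being constructed by hand. The two test functions follow pytest's naming convention so a CI job could collect them.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical team convention: every span must carry these attributes.
REQUIRED_ATTRIBUTES = {"service.name", "http.route"}


@dataclass
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    name: str
    attributes: dict = field(default_factory=dict)


def telemetry_problems(spans):
    """Return human-readable problems; an empty list means the trace looks healthy."""
    problems = []
    trace_ids = {s.trace_id for s in spans}
    if len(trace_ids) != 1:
        problems.append(f"expected 1 trace, found {len(trace_ids)} (context not propagated?)")
    roots = [s for s in spans if s.parent_id is None]
    if len(roots) != 1:
        problems.append(f"expected 1 root span, found {len(roots)}")
    span_ids = {s.span_id for s in spans}
    for s in spans:
        if s.parent_id is not None and s.parent_id not in span_ids:
            problems.append(f"{s.name}: parent {s.parent_id} not in trace")
        missing = REQUIRED_ATTRIBUTES - set(s.attributes)
        if missing:
            problems.append(f"{s.name}: missing attributes {sorted(missing)}")
    return problems


def simulate_checkout(propagate=True):
    """Hypothetical stand-in for exercising an instrumented request path in a test."""
    root = Span("t1", "s1", None, "POST /checkout", {"service.name": "api", "http.route": "/checkout"})
    # A refactor that drops context propagation makes the child start a new trace.
    child = Span("t1" if propagate else "t2", "s2", "s1" if propagate else None,
                 "charge card", {"service.name": "payments", "http.route": "/charge"})
    return [root, child]


def test_checkout_trace_is_continuous():
    assert telemetry_problems(simulate_checkout()) == []


def test_dropped_propagation_is_caught():
    assert telemetry_problems(simulate_checkout(propagate=False))


if __name__ == "__main__":
    test_checkout_trace_is_continuous()
    test_dropped_propagation_is_caught()
    print("telemetry checks passed")
```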

This approach aligns with the concept of “Shift-Left Observability,” advocating for the integration of observability methods earlier in the software development lifecycle to catch issues proactively.

Shift-Left Observability: Early Insights in SDLC

This paper explores the newly proposed concept of “Shift-Left Observability,” which integrates observability methods earlier in the software development lifeline to combine visibility and insight directly into the code, build, and testing phases. Adopting a shift-left method allows teams to detect problems early on, speed up debugging efforts, and increase developer, testers, and operations staff communication.

Shift-Left Observability: Embedding Insights from Code to Production, H Allam, 2024

CI/CD integration and SRE governance

Integrating observability into CI/CD means adding telemetry-related gates: unit tests for instrumentation, integration tests that assert SLI-like behaviours, and canary analyses that monitor telemetry during rollout to detect regressions early. SRE governance formalises responsibilities: define who owns SLIs, who reviews instrumentation changes, and how error budgets influence release velocity. Runbooks should link alerts to diagnostic queries and remediation steps so on-call engineers can act quickly and consistently. By embedding these controls into the delivery pipeline and organisational processes, teams make observability a core part of software delivery rather than an afterthought, which improves reliability and developer productivity.
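The release-gating logic can be sketched as a small pure function that a pipeline step might call. The thresholds (1.5x the baseline error rate, a 500-request minimum, a 0.1% floor) and the "freeze when the budget is spent" policy are illustrative assumptions, and the canary check is a simple ratio comparison rather than the statistical tests that dedicated canary-analysis tools use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Window:
    """Request and error counts observed for one deployment over the same interval."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_passes(baseline, canary, max_ratio=1.5, min_requests=500, floor=0.001) -> Optional[bool]:
    """Compare error rates; None means not enough canary traffic to judge yet.
    `floor` stops a near-zero baseline from making any single error look fatal."""
    if canary.requests < min_requests:
        return None
    return canary.error_rate <= max_ratio * max(baseline.error_rate, floor)


def release_decision(budget_remaining, baseline, canary) -> str:
    """Apply the error-budget policy first, then the canary analysis."""
    if budget_remaining <= 0:
        return "freeze"  # budget spent: pause feature rollouts, prioritise reliability work
    verdict = canary_passes(baseline, canary)
    if verdict is None:
        return "wait"
    return "promote" if verdict else "rollback"


baseline = Window(requests=10_000, errors=20)  # 0.2% errors on the stable version
print(release_decision(0.6, baseline, Window(1_000, 2)))   # promote
print(release_decision(0.6, baseline, Window(1_000, 10)))  # rollback
print(release_decision(0.6, baseline, Window(100, 0)))     # wait
print(release_decision(0.0, baseline, Window(1_000, 2)))   # freeze
```

Keeping the decision a pure function of telemetry counts and the remaining budget makes the policy itself testable and reviewable, in the same spirit as treating instrumentation as code.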